Abstract

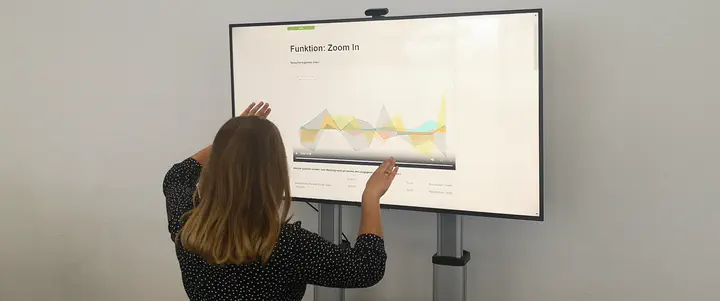

Multimodal interaction for visual data analysis and exploration provides new opportunities to empower users to engage with data. However, it is not well understood which input modalities should be leveraged for specific information visualization (InfoVis) operations, or how users would prefer to use them during data analysis and exploration. To close this research gap, we performed a user-elicitation study to examine how users employ touch, speech, mid-air hand gestures, and combinations of these for various InfoVis operations on large interactive displays. We believe this analysis will help identify the associated challenges and inform the development of systems that offer multimodal interaction capabilities for visual data analysis and exploration.